Uncovering the Dark Side of AI: 7 Hidden Risks, Dangers, and Mitigation Strategies

🤖 Ever wondered about the dark side of AI? While AI promises a brighter future, it also hides potential dangers that could impact your life. Explore the risks, dangers, and how to mitigate them.

From biased algorithms making decisions about your loan applications to AI systems that might compromise your privacy, the risks are real and growing. You’re probably already interacting with AI dozens of times daily – through social media, smart devices, or automated customer service – but do you truly understand what’s at stake? The threats aren’t just about robots taking over; they’re about subtle, pervasive changes that could reshape your world in concerning ways.

In this eye-opening exploration, you’ll discover the seven critical risks of AI technology that experts are warning about, and more importantly, learn how to protect yourself against them. We’ll dive deep into everything from algorithmic bias and privacy concerns to the broader societal impacts that could affect your job security and personal relationships. Let’s uncover these hidden dangers together and explore practical strategies to navigate the AI-driven future safely. 🛡️

Algorithmic Bias and Discrimination

Impact on Minority Communities

You might be surprised to learn that AI systems often perpetuate and amplify existing societal biases against minority communities. These biases manifest in various ways:

- Facial recognition systems showing lower accuracy rates for darker skin tones

- Language processing models misinterpreting cultural contexts

- Content recommendation systems favoring majority perspectives

- Social media algorithms disproportionately flagging minority content

Employment and Financial Decision Making

When you apply for jobs or loans, AI algorithms might be making critical decisions about your future. Here’s how algorithmic bias affects these areas:

| Decision Area | Potential Bias Impact |
|---|---|
| Job Applications | Resume screening systems favoring certain names or educational backgrounds |
| Loan Approvals | Credit scoring models disadvantaging specific zip codes |
| Insurance Rates | Risk assessment algorithms penalizing certain demographics |
| Promotion Decisions | Performance evaluation systems favoring particular communication styles |
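One way to make this kind of bias visible is to compare approval rates across groups. The sketch below is a minimal illustration, not how any particular lender audits its models: the group labels and decisions are made-up sample data, the function names are hypothetical, and the 0.8 cutoff is the "four-fifths" rule of thumb borrowed from US employment-selection guidelines, used here only as an assumed warning threshold.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group approval rate to the highest."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so no group is favored
    return min(rates.values()) / highest


# Hypothetical loan decisions: (zip_code_group, approved)
sample_decisions = (
    [("zip_A", True)] * 8 + [("zip_A", False)] * 2
    + [("zip_B", True)] * 5 + [("zip_B", False)] * 5
)

ratio = disparate_impact_ratio(sample_decisions)
flagged = ratio < 0.8  # assumed threshold: the four-fifths rule of thumb

print(f"Approval rates: {approval_rates(sample_decisions)}")
print(f"Disparate impact ratio: {ratio:.2f}, flagged: {flagged}")
```

A low ratio does not prove discrimination on its own, but it is a signal that the decisions deserve a closer look.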

Healthcare Disparities

Your access to quality healthcare could be compromised by biased AI systems. Consider these critical areas:

- Diagnostic algorithms trained primarily on data from majority populations

- Treatment recommendation systems not accounting for genetic diversity

- Patient risk assessment tools showing systematic bias against certain groups

- Resource allocation models favoring specific demographic profiles
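A simple check for the first point is to measure a diagnostic model’s accuracy separately for each patient group instead of reporting one overall number. This is a rough sketch under stated assumptions: the group names and records are invented for illustration, and `accuracy_by_group` and `accuracy_gap` are hypothetical helper names, not part of any medical software.

```python
def accuracy_by_group(records):
    """records: iterable of (group, predicted_label, true_label) tuples."""
    correct = {}
    total = {}
    for group, predicted, actual in records:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + (predicted == actual)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values())


# Hypothetical evaluation results: (group, model prediction, real diagnosis)
evaluation = (
    [("well_represented", "ill", "ill")] * 9
    + [("well_represented", "healthy", "ill")] * 1
    + [("under_represented", "ill", "ill")] * 6
    + [("under_represented", "healthy", "ill")] * 4
)

per_group = accuracy_by_group(evaluation)
gap = accuracy_gap(evaluation)
print(per_group, f"gap={gap:.2f}")
```

An overall accuracy figure would hide the fact that one group is served noticeably worse than the other, which is exactly the failure mode described above.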

Legal Implications

You should be aware of the growing legal challenges surrounding algorithmic bias:

| Legal Aspect | Current Development |
|---|---|
| Civil Rights | Increasing cases of discrimination lawsuits |
| Regulatory Compliance | New requirements for AI audit trails |
| Corporate Liability | Companies facing legal consequences for biased algorithms |
| Consumer Protection | Emerging laws protecting against algorithmic discrimination |

The impact of algorithmic bias extends far beyond individual interactions, creating systemic barriers that can affect entire communities. For instance, when you’re denied a loan based on your zip code, the decision might reflect historical redlining practices that are now embedded in the data the algorithm learned from. Biases like these perpetuate inequality by reinforcing existing disparities, making it harder for marginalized groups to access opportunities.

Similarly, if you’re from a minority background, you might face additional hurdles in healthcare diagnosis because of AI systems trained on non-representative data sets. The result can be inaccurate diagnoses, unequal treatment, and worse health outcomes for underrepresented communities.

These challenges highlight the critical need for better AI oversight and diverse representation in AI development teams. Without such efforts, biased systems will continue to exacerbate existing inequalities, particularly in fields like finance and healthcare.

As we examine privacy and data security concerns in the next section, you’ll see how these risks intersect with other significant dangers of AI, creating a complex web of challenges that affect your daily life.

Privacy and Data Security Concerns

Personal Data Exploitation

Your personal data is becoming increasingly vulnerable as AI systems grow more sophisticated in collecting and analyzing information. AI algorithms can piece together seemingly unrelated data points to create detailed profiles of your behavior, preferences, and daily routines. Here’s how your data might be exploited:

- Digital footprint tracking

- Cross-platform data correlation

- Behavioral pattern prediction

- Financial habit analysis

- Location history mapping
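Cross-platform correlation is easier than it sounds. The sketch below shows how two data sets that look harmless on their own can be joined on a few shared fields (zip code, birth year, gender) to attach a name to supposedly anonymous activity data. Everything here is an assumption made for illustration: the records, the field names, and the `link_records` helper are all invented.

```python
def link_records(dataset_a, dataset_b, keys):
    """Join two lists of dicts on shared quasi-identifier fields."""
    index = {}
    for row in dataset_b:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)

    linked = []
    for row in dataset_a:
        matches = index.get(tuple(row[k] for k in keys), [])
        if len(matches) == 1:  # a unique match is likely the same person
            linked.append({**row, **matches[0]})
    return linked


# Hypothetical "anonymous" fitness data
fitness_app = [
    {"zip": "90210", "birth_year": 1985, "gender": "F", "avg_daily_steps": 11200},
    {"zip": "10001", "birth_year": 1990, "gender": "M", "avg_daily_steps": 4300},
]

# Hypothetical loyalty-card data that includes names
loyalty_card = [
    {"zip": "90210", "birth_year": 1985, "gender": "F",
     "name": "Jane Example", "weekly_spend": 140},
    {"zip": "60601", "birth_year": 1972, "gender": "M",
     "name": "John Sample", "weekly_spend": 75},
]

profiles = link_records(fitness_app, loyalty_card,
                        keys=("zip", "birth_year", "gender"))
for profile in profiles:
    print(profile)
```

Neither data set reveals much alone, but the combined profile links a named person to both her activity level and her spending habits.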

Surveillance Capabilities

Modern AI-powered surveillance systems present unprecedented monitoring capabilities that can affect your privacy in ways you might not expect:

| Surveillance Type | Impact on Privacy | Common Applications |
|---|---|---|
| Facial Recognition | Personal identification in public spaces | Security systems, social media |
| Behavioral Analysis | Pattern detection in daily activities | Smart cities, retail analytics |
| Voice Recognition | Audio monitoring and identification | Virtual assistants, call centers |
| Biometric Tracking | Physical characteristic monitoring | Access control, health monitoring |

Data Breaches and Vulnerabilities

Your data faces significant risks due to AI-related vulnerabilities in storage and processing systems. Consider these critical exposure points:

- AI models can be reverse-engineered to extract training data, potentially exposing your personal information

- Machine learning systems may inadvertently memorize sensitive data during training

- Adversarial attacks can manipulate AI systems to reveal protected information

- Integration points between AI systems and databases create additional security gaps

To protect yourself, consider implementing these essential safeguards:

- Regularly review privacy settings across all your digital services

- Use strong, unique passwords and enable two-factor authentication

- Be selective about sharing personal information with AI-powered services

- Monitor your accounts for suspicious activities

- Stay informed about data protection rights in your region

The sophisticated nature of AI systems means that traditional security measures may not be sufficient to protect your data. These systems can process and analyze information at unprecedented scales, making it crucial to understand how your data might be used or misused. Security vulnerabilities in AI systems can expose not just individual data points, but entire patterns of behavior and personal characteristics that you might prefer to keep private.

Now that you understand the privacy implications of AI systems, let’s examine another critical concern: the potential impact of AI on employment and the economy.

Job Displacement and Economic Impact

Vulnerable Industries and Roles

Your job could be at risk as AI continues to advance. The most vulnerable sectors include:

- Customer service and support

- Data entry and administrative work

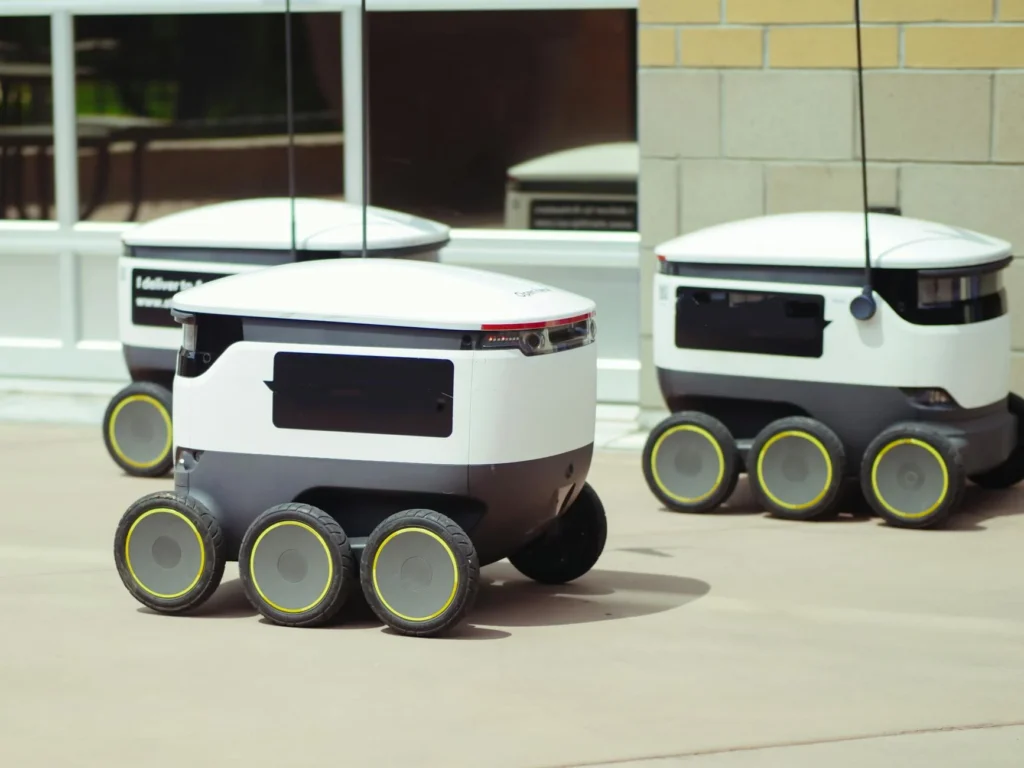

- Transportation and logistics

- Manufacturing and assembly

- Basic financial services

- Retail operations

| Industry | AI Impact Level | Timeline |
|---|---|---|
| Customer Service | High | 1-3 years |
| Manufacturing | Very High | Already happening |
| Transportation | High | 3-5 years |
| Financial Services | Medium | 2-4 years |

Workforce Transition Challenges

You’ll face several obstacles when adapting to AI-driven workplace changes:

- Rapid skill obsolescence

- Limited time for retraining

- Financial constraints during transition

- Age-related adaptation difficulties

- Access to quality education resources

Socioeconomic Implications

Your community and economic landscape will experience significant shifts:

- Income inequality may widen as high-skill workers benefit while others struggle

- Traditional career paths will become less reliable

- Geographic economic disparities could increase

- Social safety nets may require restructuring

- Small businesses might face increased pressure to automate

Skills Gap Evolution

To remain competitive, you’ll need to focus on developing these emerging skills:

- AI Systems Management

- Data Analysis and Interpretation

- Human-AI Collaboration

- Creative Problem-Solving

- Emotional Intelligence

- Complex Decision-Making

| Current Skills | Future Skills Needed |
|---|---|
| Technical Expertise | AI Literacy |
| Basic Digital Skills | Advanced Digital Fluency |
| Traditional Management | Human-AI Team Leadership |

The impact of AI on employment isn’t just about job losses – it’s about fundamental changes to how you work and earn a living. While some roles will disappear, new opportunities will emerge. Your success depends on staying adaptable and developing skills that complement AI rather than compete with it.

You’ll need to identify which aspects of your current role might be automated and start preparing for change now. Consider investing in continuous learning and exploring fields where human skills remain valuable. Remember that soft skills like emotional intelligence, creativity, and complex problem-solving will become increasingly important as AI handles more routine tasks.

Now that you understand the potential impact on jobs and the economy, it’s crucial to examine how AI makes decisions that affect these changes.

AI Decision-Making Transparency

The Black Box Problem

You’re likely familiar with AI making decisions that affect your daily life, from credit approvals to medical diagnoses. However, one of the most concerning aspects is the “black box” nature of these decisions. Unlike human decision-makers who can explain their reasoning, AI systems often operate in ways that even their creators cannot fully understand.

| Aspect | Traditional Decision-Making | AI Decision-Making |
|---|---|---|
| Reasoning Process | Explicit and documentable | Often opaque and complex |
| Explanation Ability | Can provide clear rationale | Limited or impossible |
| Verification | Step-by-step audit possible | Challenging to verify |
| Correction | Easy to identify and fix errors | Difficult to pinpoint issues |
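To see what an "explicit and documentable" decision looks like in practice, here is a minimal sketch of a transparent scoring rule that reports how much each factor pushed the result up or down. The weights, threshold, and field names are invented for illustration and are not taken from any real credit model; complex AI systems generally cannot produce a breakdown this directly, which is the heart of the black box problem.

```python
# Hypothetical weights and cutoff for a simple, fully transparent score
WEIGHTS = {
    "income_thousands": 0.5,
    "years_employed": 2.0,
    "missed_payments": -10.0,
}
THRESHOLD = 40.0


def explain_decision(applicant):
    """Score an applicant and list each factor's contribution."""
    contributions = {
        name: WEIGHTS[name] * applicant[name] for name in WEIGHTS
    }
    score = sum(contributions.values())
    # Sort so the factors that hurt the applicant most come first
    ranked = sorted(contributions.items(), key=lambda item: item[1])
    return {
        "approved": score >= THRESHOLD,
        "score": score,
        "contributions": ranked,
    }


result = explain_decision(
    {"income_thousands": 60, "years_employed": 5, "missed_payments": 1}
)
print("Approved:", result["approved"], "Score:", result["score"])
for factor, points in result["contributions"]:
    print(f"  {factor}: {points:+.1f}")
```

With a rule like this, a rejected applicant can be told exactly which factor cost them the most, and an auditor can verify every step.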

Accountability Issues

When AI makes a mistake, you might wonder who’s responsible. This accountability gap creates significant challenges:

- Difficulty in assigning responsibility for AI decisions

- Lack of clear liability frameworks

- Challenges in proving causation between AI decisions and harmful outcomes

- Limited recourse for individuals affected by AI errors

The absence of clear accountability structures means you might find yourself in situations where an AI system makes a decision affecting your life, but no one can be held responsible for potential negative outcomes.

Regulatory Challenges

Current regulatory frameworks weren’t designed with AI systems in mind, leaving you vulnerable to potential misuse or harm. Key regulatory challenges include:

- Jurisdictional Issues

  - Different countries having varying standards

  - Cross-border AI applications creating compliance complexity

  - Inconsistent enforcement mechanisms

- Technical Complexity

  - Regulators struggling to understand advanced AI systems

  - Rapid technological advancement outpacing regulation

  - Difficulty in creating meaningful oversight

- Implementation Hurdles

  - Balancing innovation with protection

  - Ensuring compliance without stifling development

  - Creating practical audit mechanisms

You need to understand that these transparency issues aren’t just technical problems – they’re fundamental challenges that could affect your rights and well-being. As AI systems become more prevalent in critical decision-making processes, the lack of transparency could lead to unjust outcomes without clear paths for appeal or rectification.

Now that you understand the challenges of AI decision-making transparency, let’s explore another critical concern: the safety implications of autonomous systems operating in the real world.

Autonomous Systems Safety

Self-Driving Vehicle Risks

You need to understand that autonomous vehicles, while promising, present significant safety challenges. These systems must make split-second decisions in complex traffic scenarios, and any malfunction could lead to severe consequences. The main risks include:

- Sensor failures in adverse weather conditions

- Difficulty interpreting unpredictable human behavior

- System vulnerabilities to cyber attacks

- Edge cases not covered in training data

AI-Controlled Infrastructure

Your daily life increasingly depends on AI-controlled infrastructure systems. Smart grids, traffic management systems, and automated building controls all rely on artificial intelligence. Here’s what you should know about the associated risks:

| Infrastructure Type | Primary Risks | Potential Impact |
|---|---|---|
| Smart Grids | Cyber attacks, System failures | Widespread power outages |
| Traffic Systems | Algorithm errors, Communication breakdowns | Traffic chaos, Accidents |
| Building Controls | Sensor malfunctions, Software bugs | Safety hazards, Comfort issues |

Military Applications

You must recognize the serious implications of autonomous military systems. These applications range from surveillance drones to weapon systems, raising critical safety concerns:

- Potential for autonomous weapons to make incorrect targeting decisions

- Risk of systems being hacked or compromised

- Challenges in maintaining human oversight and control

- Difficulties in predicting system behavior in complex combat scenarios

Emergency Response Systems

Your safety during emergencies increasingly relies on AI-powered response systems. While these systems can enhance emergency services, they also present unique challenges:

| Component | Risk Factor | Safety Implication |
|---|---|---|
| Dispatch Systems | Algorithm errors | Delayed response times |
| Resource Allocation | Incorrect prioritization | Inadequate emergency coverage |
| Automated Alerts | False positives/negatives | Public panic or complacency |

To ensure your safety, these systems require:

- Regular testing and validation

- Robust backup systems

- Continuous human monitoring

- Regular updates and maintenance

In critical situations, AI systems must balance speed with accuracy while maintaining safety protocols. System redundancies and fail-safes are essential to prevent catastrophic failures. The integration of human oversight remains crucial in all autonomous systems, especially those where lives are at stake.

Human supervision becomes particularly vital when dealing with AI systems that control critical infrastructure or emergency services. You should always have access to manual override options and clear protocols for system failures.

Now that you understand the safety considerations in autonomous systems, let’s explore how these technological advances affect our psychological well-being and social interactions.

Psychological and Social Effects

Digital Addiction

The increasing integration of AI-powered applications in our daily lives has led to unprecedented levels of digital dependency. You might notice yourself constantly checking AI-driven recommendation systems on social media or streaming platforms, designed specifically to keep you engaged. These algorithms learn your preferences and create a personalized dopamine loop, making it increasingly difficult to disconnect.

| Addiction Warning Signs | Impact on Daily Life |
|---|---|
| Constant app checking | Reduced productivity |
| Loss of time awareness | Sleep disruption |
| FOMO when offline | Decreased physical activity |
| Anxiety without devices | Social isolation |

Human Relationship Impact

AI technologies are fundamentally reshaping how you interact with others. While AI chatbots and virtual assistants offer convenience, they’re subtly altering your social expectations and communication patterns. Consider these concerning trends:

- Decreased tolerance for human imperfection

- Reduced capacity for deep, meaningful conversations

- Preference for digital over face-to-face interactions

- Weakening of empathy and emotional intelligence

The constant exposure to perfectly curated AI responses may leave you feeling disappointed with real human interactions, which are naturally messier and more complex.

Mental Health Concerns

The psychological impact of AI extends beyond addiction and relationship changes. You’re facing new forms of anxiety and stress directly related to AI integration:

- AI Performance Anxiety

  - Fear of being outperformed by AI

  - Constant pressure to upgrade skills

  - Imposter syndrome in the AI age

- Digital Overwhelm

  - Information overload

  - Decision fatigue from endless choices

  - Difficulty distinguishing real from AI-generated content

- Identity and Self-Worth Issues

  - Comparing yourself to AI-enhanced standards

  - Questioning human value and purpose

  - Feeling inadequate in an AI-driven world

The constant exposure to AI systems can create a sense of inadequacy as you compare your capabilities to machine intelligence. You might find yourself struggling with authenticity in a world where AI-generated content becomes increasingly prevalent.

These psychological and social effects highlight the importance of maintaining healthy boundaries with AI technology. Understanding how AI impacts your mental well-being is crucial for developing healthy coping mechanisms. As we explore risk mitigation strategies in the next section, you’ll discover practical ways to protect your psychological well-being while still benefiting from AI advancements.

Risk Mitigation Strategies

Ethical AI Development Framework

You need a robust ethical framework to guide AI development and deployment. Implement these key components:

- Clear ethical guidelines for AI development teams

- Regular ethics reviews and impact assessments

- Diverse development teams to ensure multiple perspectives

- Built-in fairness metrics and testing protocols

Regular Security Audits

Protecting your AI systems requires comprehensive security measures:

| Audit Type | Frequency | Key Focus Areas |
|---|---|---|
| Vulnerability Assessment | Monthly | System weaknesses, entry points |
| Penetration Testing | Quarterly | Active security testing |
| Code Review | Bi-weekly | Source code security |
| Data Protection | Continuous | Privacy compliance |

Human Oversight Implementation

You must maintain meaningful human control over AI systems:

- Establish clear chains of command for AI decision oversight

- Create emergency override protocols

- Implement human-in-the-loop validation for critical decisions

- Set up regular performance reviews by human experts
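Human-in-the-loop validation can be as simple as a routing rule: let the model decide only when it is confident and the stakes are low, and send everything else to a person. The sketch below is one possible shape for that rule, not a standard implementation. The 0.9 confidence threshold, the `Decision` record, and the `cautious_reviewer` stand-in for a real human reviewer are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str
    confidence: float
    decided_by: str  # "model" or "human"


def decide_with_oversight(model_outcome, model_confidence, human_review,
                          threshold=0.9, high_stakes=False):
    """Route high-stakes or low-confidence cases to a human reviewer."""
    if high_stakes or model_confidence < threshold:
        outcome = human_review(model_outcome, model_confidence)
        return Decision(outcome, model_confidence, "human")
    return Decision(model_outcome, model_confidence, "model")


def cautious_reviewer(suggested_outcome, confidence):
    """Stand-in for a real person; escalates very uncertain cases."""
    return "escalate" if confidence < 0.5 else suggested_outcome


routine = decide_with_oversight("approve", 0.95, cautious_reviewer)
uncertain = decide_with_oversight("deny", 0.30, cautious_reviewer)
critical = decide_with_oversight("approve", 0.99, cautious_reviewer,
                                 high_stakes=True)

for decision in (routine, uncertain, critical):
    print(decision)
```

Logging which decisions went to a human, and how often the human disagreed with the model, also gives you the "override instances" figure that a performance review would need.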

Public Education Programs

You can help bridge the knowledge gap through structured education initiatives:

- Develop AI literacy programs for different age groups

- Create awareness campaigns about AI capabilities and limitations

- Provide practical training on interacting with AI systems

- Share transparent information about AI risks and benefits

International Cooperation Standards

Your organization should actively participate in global AI governance:

- Adopt internationally recognized AI safety standards

- Share best practices with other organizations

- Participate in cross-border AI safety initiatives

- Support global AI regulation efforts

To effectively implement these strategies, you need to prioritize regular assessment and updates. Create a dedicated team responsible for monitoring and improving these measures. Document all incidents and near-misses to build a comprehensive knowledge base for future reference.

Establish clear metrics to measure the effectiveness of each strategy:

| Strategy | Key Performance Indicators |
|---|---|
| Ethical Framework | Ethics violation reports, Bias incidents |
| Security Audits | Security breaches, Vulnerability fixes |
| Human Oversight | Override instances, Decision accuracy |
| Public Education | Program participation, Understanding metrics |
| International Cooperation | Standards compliance, Partnership engagement |

With these risk mitigation strategies in place, you can better protect against the potential dangers of AI while maximizing its benefits for society.


Conclusion

The rapid advancement of AI technology brings both unprecedented opportunities and significant challenges that demand your attention. From algorithmic bias and privacy concerns to job displacement and safety risks, these hidden dangers can impact various aspects of your life and society at large. Understanding these risks is crucial for making informed decisions about AI adoption and usage.

Your proactive approach to AI risk mitigation is essential. By implementing robust security measures, ensuring algorithmic transparency, and staying informed about AI developments, you can help create a safer and more equitable AI-driven future. Remember, the key to responsible AI usage lies in striking the right balance between innovation and security, while always prioritizing human values and ethical considerations in AI development and deployment.

FAQ

What is the dark side of AI?

What are the 7 hidden risks of AI?

Algorithmic Bias: Discrimination due to biased data or faulty algorithms.

Privacy and Data Security Concerns: How AI systems can exploit personal data.

Job Displacement: AI’s potential to replace human workers in various industries.

AI Decision-Making Transparency: The challenges around understanding AI’s decision-making process.

Autonomous Systems Safety: Risks posed by AI-powered systems like self-driving cars and drones.

Psychological and Social Effects: How AI impacts mental health and social relationships.

Legal and Ethical Issues: The growing need for regulations and ethical considerations in AI development.

How does AI create biases and discrimination?

What can be done to mitigate the risks of AI?

Improving Algorithm Transparency: Ensuring that AI systems are explainable and their decisions can be understood.

Enhancing Data Diversity: Using diverse and representative data to train AI systems.

Strict Regulations: Implementing stronger privacy protections and AI governance frameworks.

AI Ethics Education: Promoting awareness and training for developers to understand the societal impact of AI technologies.

Human Oversight: Ensuring human control and monitoring in critical AI applications, especially in healthcare, law enforcement, and finance.
